Artificial Intelligence (AI) has swiftly infiltrated nearly every sector imaginable, and the healthcare industry is no exception. While AI’s potential to revolutionize the medical field is undeniable, it has sparked heated debates among healthcare professionals. Can these intelligent algorithms completely replace doctors, or will they serve as assistants, improving efficiency while physicians maintain the lead? To answer these questions, we’ll explore what the medical community thinks about AI, how it’s currently being used in medicine, and whether or not it will replace human doctors anytime soon.

How is AI used in medicine today?

Whether physicians like it or not, AI is already transforming healthcare, tackling everything from diagnostics to administrative workflows. According to insights from a recent Sermo poll, physicians use AI to varying degrees in their daily lives. While 41% of respondents use AI for basic tasks like image searching, only 7% have fully integrated AI into their practices, and 21% are terrified of or opposed to using AI, often citing its current privacy and trust limitations. Still, AI’s presence in healthcare is significant and expanding across multiple domains.

Where AI is already making an impact in healthcare

Current AI technology excels in areas requiring data analysis and pattern recognition. For example:

- Medical diagnostics: AI algorithms have demonstrated accuracy comparable to that of trained radiologists in some imaging tasks, especially within controlled research settings, identifying conditions such as cancer, heart disease, and neurological disorders. However, generalizability to real-world clinical practice is still under investigation. Research suggests that AI tools can expedite diagnosis and reduce oversights, especially in complex or hard-to-catch cases.

- Personalized treatment plans: using predictive analytics, AI in healthcare helps tailor treatments to individual patients.

- Administrative simplification: AI is particularly well suited to automating repetitive tasks such as appointment scheduling, billing, and coding, helping to take administrative burdens off physicians’ plates.

- Virtual assistance: AI chatbots can address patient inquiries and common questions, helping reduce the workload on healthcare providers and staff. A General Practitioner on Sermo describes AI’s broader potential: “AI can assist clinicians in taking a more comprehensive approach to disease management, better coordinate care plans, and help patients to better manage and comply with their long-term treatment programs, in addition to helping providers identify chronically ill individuals who may be at risk of an adverse episode.”

Nonetheless, as one Sermo member and family physician states, “Medicine today has already taken a path of more reliance on the computer than the patient – personal interactions are going away, leaving out addressing the full range of patient concerns and other issues outside the primary problem. This is already happening due to the restricted appointment time of 15 to 20 minutes per patient and the computer entries required these days. The in-person interactions are already disappearing, leading too often to incomplete evaluations and mistaken diagnoses. AI certainly has some uses, but it cannot replace the physician.”

When Sermo surveyed physicians about AI’s potential roles in healthcare:

- 46% of respondents saw its value as an administrative tool, such as a scribe, to reduce paperwork.

- Only 17% believe it could make meaningful clinical suggestions, suggesting that confidence in its clinical role remains limited.

- 16% believe AI could improve reimbursement by making suggestions regarding billing and coding.

- 13% say AI could help with scheduling, but nothing else.

While many recognize AI’s strengths, concerns about it replacing physicians or reducing the quality of care are pervasive. Reflecting this is the opinion of one neurosurgeon on Sermo who writes, “I believe that there are positives and negatives in using AI. One negative for physicians and patients is that, as it becomes more powerful for diagnosing, it can be used more by NPs and PAs, and make them perceived as the same as physicians. Insurance companies could use them to replace many physicians, more than they do now. It could also decrease the number of surgeons that are needed. They could see patients in the office and make surgical decisions, more than they currently do.”

Why AI won’t replace doctors

The fear of replacement by AI in the healthcare community is rampant. A recent Sermo poll reveals that 58% of physicians believe AI will change the face of healthcare, either diminishing the physician’s role or making doctors obsolete.

However, there are good reasons to question that outcome. Even with the rapid advancement of AI models, several factors keep doctors irreplaceable in patient care. Medicine is not just about data-driven decisions; it encompasses human empathy, cross-cultural communication, clinical judgment, and the ability to manage complex, nuanced situations.

The human touch in medicine

Healthcare thrives on trust and communication—elements that machines can’t replicate. Despite AI’s prowess, 42% of physicians polled by Sermo believe their roles will endure. Why? Because people will always value empathy and human-to-human interaction in healthcare. A study on trust explains that patients are more likely to follow treatment recommendations when they feel emotionally connected to their doctors.

Take this insight from a general surgeon in the UK: “So much of medicine is an ‘art’ rather than pure science. It will be difficult for AI to pick up on all of the subliminal, particularly non-verbal messages that human clinicians collect subconsciously.” Another family medicine doctor in the U.S. writes on Sermo, “I think AI will be utilized by some, but the doctor-patient relationship and the ‘art’ of medicine will not succumb to AI.”

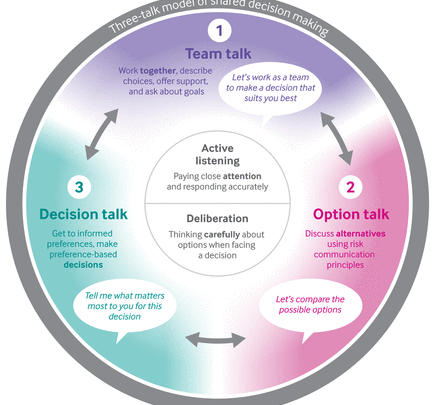

Complex medical decision-making

Effective clinical decision-making encompasses more than just symptoms and test results.

- Conflicting symptoms: human doctors use experience and intuition to prioritize one symptom over another when clinical signs contradict.

- Unspoken cues: non-verbal communication, such as noticing a patient’s hesitation, body language, or emotional distress, is critical in healthcare. AI models, meanwhile, can carry biases that stem from how they are trained.

- Social determinants of health: treating a condition isn’t just about prescribing medication. Factors like income, housing, or proximity to medical facilities play a role, things no AI algorithm can fully grasp.

- Unpredictability in care: patients’ conditions can swing drastically. Doctors excel at adapting plans in real time with a realistic view of available resources.

This echoes another perspective from a Sermo survey respondent in Germany, who remarks, “AI will overtake many of my daily professions but will not be able to totally substitute myself.” Beyond diagnosis, physicians offer empathy, manage fear, and guide patients through emotional and ethical complexities, aspects of quality patient care that AI cannot replicate.

Are patients using AI to diagnose themselves?

Patients are already notorious for Googling and asking WebMD about every symptom under the sun. More recently, patients are turning to online AI tools and chatbots before setting foot into a doctor’s office. According to a recent Sermo poll, 47% of physicians cite misdiagnosis or delayed care as their top concern when patients use AI for medical advice. Another 24% worry that AI simply lacks the clinical nuance needed for sound decision-making.

Advanced chatbots and virtual agents are examples of conversational AI in healthcare, offering a more humanized chat experience compared to traditional bots. Patients can use conversational AI to book, cancel, or reschedule appointments, ask about their medication, or obtain details about a diagnosis, all conveniently without needing to call or visit the clinic. Physicians benefit from automated appointment reminders, transcribed patient interactions, and simplified feedback collection. However, the jury is still out on the efficacy of such tools.

An interesting case study on how patients are using AI comes from The British Medical Journal, where patient Hayley Brackley reported losing much of her vision seemingly out of nowhere. She went to a local clinic with eye pain, where a prescribing pharmacist diagnosed sinusitis. She took the recommended medicine, but her vision started to deteriorate rapidly.

Her first step was to ask ChatGPT for advice. The chatbot suggested getting the issue checked, so she did. An optician found significant inflammation and a haemorrhage in her optic nerve, which is now being treated.

It’s no surprise she turned to ChatGPT first. She prefers it over Google because it’s faster and more conversational. She’s not alone—200 million people use this AI chatbot daily. Before her eye appointment, she also used ChatGPT to prepare for potential questions, which boosted her confidence. Brackley, who has ADHD and autism, found this especially helpful.

This raises key questions. Should patients rely on AI tools? How should healthcare systems respond to patients using untested tools? And what does this reveal about gaps in healthcare?

Doctors writing in Scientific American report that AI-powered diagnostic platforms, such as Google’s Med-PaLM, can be highly accurate in controlled environments—aligning with medical and scientific consensus 92.6% of the time, compared to 92.9% for physicians. However, these systems often fall short when dealing with complex or urgent cases because they lack the depth and nuance of human expertise. Additionally, the absence of personal reassurance and the tendency to overlook subtle details can cause unnecessary anxiety, prompting many patients to return to their doctors for further guidance.

Sermo’s physician poll reflects a mixed bag of patient reactions to AI use in healthcare:

- 32% of respondents reported that their patients were curious about AI yet remained neutral.

- 15% said their patients felt excited about its possibilities.

- A significant 41% of physicians said AI has yet to come up in their patient discussions.

These numbers suggest that while curiosity exists, AI has yet to establish itself as a trusted diagnostic alternative for patients. Accuracy also varies widely across chatbots. To reinforce this point, a pathologist on Sermo explains, “For me, AI is here to stay, and it is a great tool in medical practice. I do not see AI as a threat to medical doctors’ job opportunities. It only has its roles to play. But of concern for me will be clients seeking the help of AI in their health case management without considering the medical doctor’s professional input.”

AI & doctors in patient care

AI doesn’t need to compete with doctors; when implemented with transparency, it should complement them. By reducing mundane tasks and potentially improving diagnostic accuracy, AI empowers clinicians to focus on what matters most: offering the best possible patient care.

Enhancing diagnostic accuracy

Sermo poll insights show 46% of physicians identify improved detection rates as AI’s most significant contribution.

In breast cancer screening, AI can identify patterns and abnormalities in mammograms that may be invisible to the naked eye, flagging tumor-like structures and potentially improving early cancer detection rates. False-positive recalls are a primary concern in cancer screening, and here new multi-modal AI-powered tools trained on half a million mammogram exams have shown promise, cutting recalls by 31.7% and radiologist workload by 43.8% while maintaining 100% sensitivity in the study setting.

It’s no wonder that 57% of U.S. physicians expect AI to become routine in diagnostics across specialties within five years.

Expanding treatment options

AI is expanding treatment options by analyzing large, multi-modal datasets spanning genomics, medical imaging, lab results, and clinical notes. It can uncover patterns in volumes of data too large to sift through manually and suggest therapies that traditional methods might miss. A review in the Future Healthcare Journal highlights how this drives precision therapeutics, enabling more tailored care for complex or rare conditions. AI platforms also evaluate immunomic and pharmacological data to identify new treatment options, from optimized medication regimens to targeted clinical trial matches.

Collaborative intelligence, in which AI works alongside clinicians, amplifies this impact. AI can act as a second opinion, analyzing blood test results and health records to flag possible diagnoses or therapies. Clinicians then interpret these findings to ensure care stays personalized and safe. By combining AI’s insights with clinicians’ expertise, patients gain access to a broader range of evidence-based treatments, making “what else is possible?” a promising question in healthcare.

Future opportunities for AI and doctor collaboration

Physicians envision AI in healthcare as a highly efficient assistant, one that eliminates drudgery rather than diminishing their importance. As one oncologist and Sermo member put it, “I think AI is great for administrative things or things that are not related directly to medical decisions.”

Future developments in AI and physician collaboration may include:

- Patient monitoring: AI tools can keep track of patients’ vitals and predict adverse events.

- Outcome predictions: machine learning models could help personalize treatments by drawing on millions of data points alongside each patient’s history and genome.

- Administrative automation: AI programs can handle routine paperwork, scheduling, and follow-ups to reduce burnout.

- Medical literature analysis: AI can rapidly scan and synthesize findings from vast volumes of research, helping physicians stay current and generate evidence-based insights for clinical decisions and scholarly writing.

Conclusion

AI in healthcare is a revolutionary force, with the potential to automate administrative tasks, enhance diagnostic accuracy, and provide data-driven insights. However, it’s clear that AI will not replace doctors: it will amplify their capabilities. Human judgment, empathy, and adaptability remain beyond digital reach.

At Sermo, conversations about AI in medicine shed light on real-world insights. Every member has something invaluable to contribute—from skeptics to enthusiasts—and every discussion moves the needle forward. Whether you’re exploring AI integration or sharing your practice’s latest success, Sermo’s physician-first platform offers a collaborative space to grow and learn together.

Key takeaways

- AI is an assistant, not a replacement, in the medical field. It is increasingly used in diagnostics, administrative tasks, and treatment planning, but it is incapable of replacing the human judgment and empathy that physicians provide.

- Patients still trust human doctors more. Most patients prefer human interaction regarding sensitive decisions or nuanced care, showing that AI is seen as a tool, not a substitute.

- Physicians are cautiously optimistic. Doctors recognize AI’s potential to reduce workload and improve accuracy, but also express concerns about over-reliance, bias, and ethical risks.

- On Sermo, physicians openly discuss the benefits and drawbacks of AI in medicine, offering real-world insights and challenging the hype with grounded, clinical perspectives.